Embracing Docker for Development Efficiency

Software development often involves creating isolated environments to test new code. Docker simplifies this by letting developers package an application with everything it needs, such as libraries and other dependencies, and ship the result as a single unit called a container. For developers who want both efficiency and consistent behavior across platforms, Docker is a pivotal tool.

Docker’s containers share the host system’s kernel and resources rather than relying on full virtualization. This makes them efficient: many containers can run on the same machine without the overhead of traditional virtual machines.

Understanding Containers and Images

A container is a running instance of a Docker image, executing as one or more isolated processes. An image is a packaged version of your application that includes everything needed to run it: code, runtime, system tools, libraries, and settings. Docker containers are often compared to virtual machines, but they are more portable and lighter on resources because they share the host operating system’s kernel instead of running a full guest operating system.

For developers, the importance of mastering Docker lies in its capability to manage software’s lifecycle, allowing testing, deployment, and scalability processes to happen swiftly and reliably regardless of the environment.

With Docker, it’s also easier to maintain and track versions of your application, manage tools, and involve multiple teams in the development process.

Getting Started with Docker

Getting started with Docker involves a few steps, including installation and running your first container. Docker has detailed documentation and an extensive community, which is especially helpful for new users. After installation, running a simple command like docker run hello-world helps validate that Docker is correctly installed and running on your system.
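Before running that first container, it can help to confirm that both the Docker command-line client and the background daemon are available. The snippet below is a minimal sketch of such a check; the function name check_docker is made up for this example, while docker info and docker run are standard Docker CLI commands.

```shell
# check_docker: report whether the docker CLI is on PATH and the daemon answers.
# (The function name is hypothetical; it is not part of Docker.)
check_docker() {
    if ! command -v docker >/dev/null 2>&1; then
        echo "docker CLI not found"
        return 1
    fi
    # "docker info" fails when the daemon (or Docker Desktop) is not running.
    if ! docker info >/dev/null 2>&1; then
        echo "docker CLI found, but the daemon is not reachable"
        return 2
    fi
    echo "docker is ready"
}
```

With the function defined, `check_docker && docker run --rm hello-world` runs the smoke test only when Docker is ready, and `--rm` cleans up the test container afterwards.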

Practical Applications of Docker in Projects

Docker’s real power shows when you begin integrating it into your projects. You can start with simple tasks, like running a web server in a container, and move on to more complex workflows involving microservices, data storage, and communication across multiple applications and databases.

Docker’s containerization technology enables you to build flexible, lightweight, and easily deployable applications. It’s a critical skill in modern software development, applicable from small-scale personal projects to large, cloud-based applications.

Conclusion

Docker’s versatility in handling project complexities, its footprint in the industry, and its growing community and resources make it a compelling choice for developers looking to streamline and solidify their workflow.

Frequently Asked Questions

- What is Docker?

Docker is a platform as a service (PaaS) that uses OS-level virtualization to deliver software in packages called containers.

- Why should I use Docker?

Docker simplifies configuration, increases your productivity, facilitates continuous integration and deployment, and enables your application to run in a variety of locations.

- How is Docker different from virtual machines?

Docker containers are more lightweight because they don’t need the extra load of a hypervisor since they run directly within the host machine’s kernel.

- Can Docker be used for developing any type of application?

Yes, Docker can be used to containerize backend services, data stores, and frontend applications.

- What are Docker images and containers?

A Docker image is a blueprint for a Docker container. It contains everything an application needs to run. A Docker container is a runtime instance of an image.

- Is Docker suitable for production environments?

Absolutely, Docker is designed to be safe and efficient for both development and production environments.

Ensuring your system meets the prerequisites for software installation is paramount to a successful setup. Thus, when gearing up to get Docker Desktop running, a couple of checkpoints must be considered.

Pre-Installation Checklist

Before diving into the installation process, we must ensure our system is fully prepared to host Docker Desktop. This entails enabling key features and configurations that Docker relies on.

- Virtual Machine Platform: On Windows, open the “Turn Windows features on or off” dialog and verify that Virtual Machine Platform is enabled. Docker Desktop relies on it when running on the WSL 2 backend.

- BIOS Virtualization: In Task Manager, open the Performance tab and select CPU; the Virtualization field shows whether it is enabled. If it is not, you will need to turn it on in the BIOS or UEFI settings, which takes a bit of research because the steps vary by manufacturer.

Smooth Sailing Installation

With the prerequisites out of the way, we shift our focus to the actual installation of Docker Desktop. It’s a straightforward process: download the installer, run it, agree to the defaults, and let it work its magic.

Post-installation, running Docker Desktop will prompt you for authentication. Take your pick from creating an account or using existing credentials from Google or GitHub. Once authenticated, you’re ready to start your Docker journey.

Following these steps should result in a hassle-free installation. However, should any issues arise, remember – solutions are often just a Google search away. Persistence is key in the world of software development.

The Role of a Dockerfile

With Docker Desktop now a part of your system, it’s time to tackle Dockerfiles. Dockerfiles are akin to blueprints – they contain a set of instructions that Docker uses to build images. These custom images are the foundation for spinning up Docker containers.

Creating your own Dockerfile demands clarity and precision in your instructions. It starts with selecting a base image and proceeds with defining steps like setting the working directory, copying necessary files, installing dependencies, and specifying network configurations and start-up commands.

Consider a Dockerfile as a master chef’s secret recipe, where the right blend of commands, file movements, and configurations results in a Docker image ready for deployment.
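As a rough illustration of those steps, the shell snippet below writes a minimal Dockerfile for a hypothetical Python script. The file name Dockerfile.example, the app.py and requirements.txt files, and port 8000 are all assumptions made for this sketch and do not come from any particular project.

```shell
# Write a minimal, illustrative Dockerfile (all file names are hypothetical).
cat > Dockerfile.example <<'EOF'
# 1. Base image to build on
FROM python:3.11-slim-bookworm

# 2. Working directory inside the image
WORKDIR /app

# 3. Copy the dependency list first so this layer is cached between builds
COPY requirements.txt .

# 4. Install dependencies
RUN pip install --no-cache-dir -r requirements.txt

# 5. Copy the application code
COPY app.py .

# 6. Document the port the app listens on
EXPOSE 8000

# 7. Start-up command
CMD ["python", "app.py"]
EOF

echo "Wrote Dockerfile.example"
```

Building it would look like `docker build -t example -f Dockerfile.example .`, run from a directory that actually contains the two files being copied.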

Did you know?

Did you know that Docker can dramatically simplify the process of setting up consistent development environments, reducing the “it works on my machine” syndrome? By using Docker containers, teams can work in unified settings regardless of individual system differences.

Frequently Asked Questions

| Question | Answer |
|---|---|
| What is Docker Desktop? | Docker Desktop is an application for macOS, Windows, and Linux machines for building and sharing containerized applications and microservices. |
| Do I need to enable virtualization in BIOS for Docker? | Yes, virtualization must be enabled for Docker Desktop to work. It allows your system to run virtual machines, which is essential for Docker containers. |
| Can Docker Desktop be used for production? | Docker Desktop is primarily intended for development and testing purposes, not for production environments. |
| Is Docker Desktop free? | Docker Desktop is free for personal use and small businesses. Larger enterprises may require a paid subscription. |
| What is a Dockerfile? | A Dockerfile is a script containing commands that Docker uses to build an image. It defines the environment in which an application will run. |
| How does Docker help developers? | Docker standardizes the development environment, enabling developers to work on the same platform and eliminating the “it works on my machine” problem. |

In conclusion, Docker Desktop’s installation and configuration might seem daunting at first, but following the requisite steps and preparations ensures a smooth process. Leveraging Dockerfiles for building container images fosters consistent and maintainable development workflows, marking the true power of Docker in modern software development.

Understanding Docker Containerization for Python Projects

Docker has become an essential tool for developers seeking to containerize their Python environments. The process often begins with a Dockerfile that specifies the base image and all necessary commands to set up the environment. The python:3.11-slim-bookworm image offers a convenient starting point: it is lightweight, built on Debian bookworm, and ships Python 3.11.

Essential Docker Commands and Linux Packages

The use of RUN commands in Docker allows for executing system-level commands such as package installations and environmental setups. Incorporating options like --no-install-recommends ensures that only essential packages are installed, keeping the image size minimal. Including utilities like software-properties-common and sudo further empowers users to manage the container’s environment effectively.
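A RUN instruction along these lines might look like the sketch below, which writes an illustrative Dockerfile fragment to a file. The file name Dockerfile.packages is a placeholder chosen for this example; the package names are the ones mentioned above.

```shell
# Write an illustrative Dockerfile that installs system packages.
cat > Dockerfile.packages <<'EOF'
FROM python:3.11-slim-bookworm

# Refresh the package index, install only the named packages (no recommended
# extras), then delete the index files so they do not stay in the image layer.
RUN apt-get update \
    && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends \
        software-properties-common sudo \
    && apt-get clean \
    && rm -rf /var/lib/apt/lists/*
EOF

echo "Wrote Dockerfile.packages"
```

Chaining the commands with `&&` inside one RUN keeps the update, install, and cleanup in a single layer, so the removed index files never add to the final image size.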

Configuring Users and Working Directories

It is a common practice to add a non-root user to the container for executing applications. This enhances security and mirrors a traditional development environment closely. Additionally, setting up the working directory to this user’s home directory ensures that the container remains organized and that permissions are correctly managed.

Setting up Environment Variables

Environment variables play a crucial role in containerized applications. Setting the PATH ensures that executables from the user’s local bin are accessible, and an API key variable can provide necessary credentials for external services like OpenAI.
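The non-root user, working directory, and PATH setup described above could be sketched as follows. The user name appuser and the file name Dockerfile.user are placeholders for this example, not names taken from the original setup.

```shell
# Write an illustrative Dockerfile that sets up a non-root user and PATH.
cat > Dockerfile.user <<'EOF'
FROM python:3.11-slim-bookworm

# Create an unprivileged account; "appuser" is a placeholder name.
RUN adduser --disabled-password --gecos '' appuser

# Later instructions and the running container use this account.
USER appuser
WORKDIR /home/appuser

# pip installs run as a non-root user place scripts in ~/.local/bin.
ENV PATH="/home/appuser/.local/bin:${PATH}"

# An API key could be set with ENV as well, but that stores the secret
# in the image, so it is left out of this sketch.
EOF

echo "Wrote Dockerfile.user"
```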

Pre-Installing Python Packages

Pre-installing Python packages through the Dockerfile allows for the creation of a ready-to-go Python environment tailored to specific use cases. Packages such as numpy, pandas, and matplotlib are frequently included due to their popularity in data science and machine learning projects.

Exposing Ports and Running Applications

Exposing the right ports and defining the correct command to run an application are vital to making your Docker container accessible and functional. For tools like AutoGen Studio, setting up these configurations allows the software to serve its interface on a specified host and port.
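Putting the package installation, exposed port, and start command together might look like the sketch below. It assumes that AutoGen Studio is installed from the autogenstudio package on PyPI and started with its ui subcommand using --host and --port options; check the project’s documentation for the exact invocation in your version. The file name Dockerfile.app is a placeholder.

```shell
# Write an illustrative Dockerfile that installs packages and starts the UI.
cat > Dockerfile.app <<'EOF'
FROM python:3.11-slim-bookworm

# Pre-install commonly used data packages plus the application itself.
# (Package name "autogenstudio" is an assumption; see the lead-in.)
RUN pip install --no-cache-dir numpy pandas matplotlib autogenstudio

# Document the port the UI listens on; publishing happens at "docker run -p".
EXPOSE 8081

# Bind to 0.0.0.0 so the UI is reachable through the published port.
CMD ["autogenstudio", "ui", "--host", "0.0.0.0", "--port", "8081"]
EOF

echo "Wrote Dockerfile.app"
```

Note that EXPOSE only documents the port. The container becomes reachable from the host once the port is published with the `-p` option of `docker run`.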

FAQs About Python and Docker

- What is Docker and how does it benefit Python development?

- Why should one use official Python Docker images?

- How can environment variables be utilized in a Docker container?

- Which are the essential packages to install for a Python data science environment?

- What does exposing a port do in a Docker container?

- How do you run an application within a Docker container?

Conclusion

Docker containers offer a robust and consistent environment for Python development and deployment. By understanding Docker commands, environmental setup, and application configuration, developers can efficiently manage their development workflows.

Setting up AutoGen Studio within a Docker container can initially seem daunting, but with a step-by-step guide it is well within reach. AutoGen Studio is a web interface for building and testing multi-agent AI workflows on top of Microsoft’s AutoGen framework, typically backed by models served through OpenAI’s API.

Prerequisites for Installation

Before diving into the installation process, you’ll need to ensure that Docker Desktop is active on your system. Docker provides a virtual environment that simplifies the deployment of applications by containing all necessary components in a single package, or container. Whether on Windows, Mac, or Linux, Docker Desktop is an essential tool for developers looking to encapsulate their projects efficiently.

Step-by-Step Configuration

The first step involves building your Docker image, a template that contains the application and its dependencies. Using the Dockerfile.base as a blueprint, Docker constructs the image that will later be utilized to instantiate containers.

The construction of the Docker image is initiated with the build command, tagging the image for easier reference:

docker build -t autogenstudio -f Dockerfile.base .

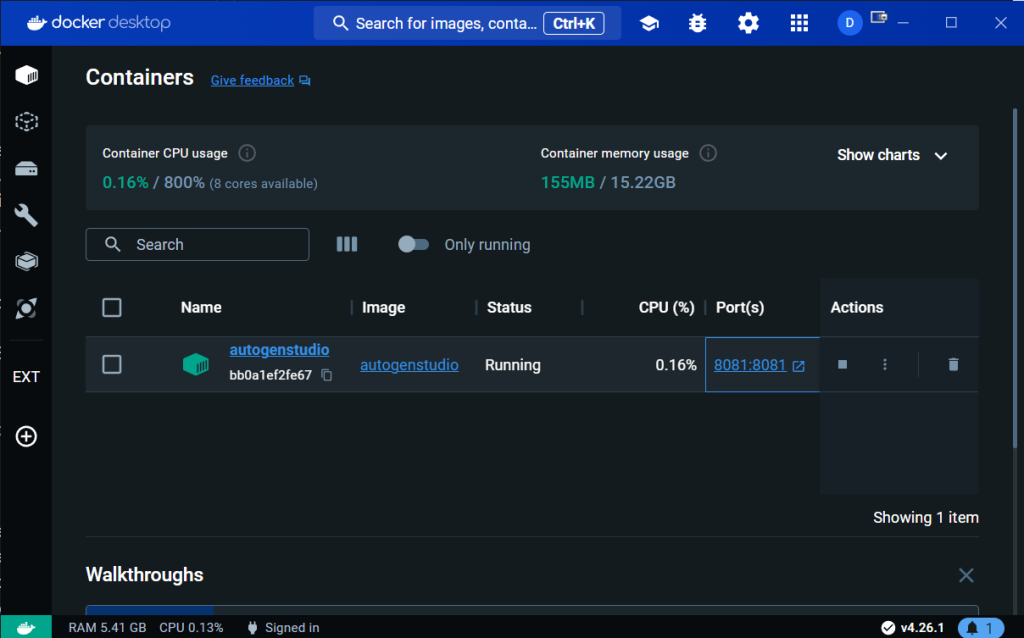

Launching Your Container

Following the creation of your Docker image, it’s time to breathe life into it by starting up the container. This is achieved through the following command:

docker run -it --rm -p 8081:8081 --name autogenstudio autogenstudio

This command runs the container in interactive mode (-it), keeping the terminal attached to the container’s processes, and removes the container automatically once it stops (--rm). The -p 8081:8081 option publishes the container’s port 8081 on port 8081 of the local machine, allowing access through a web browser.

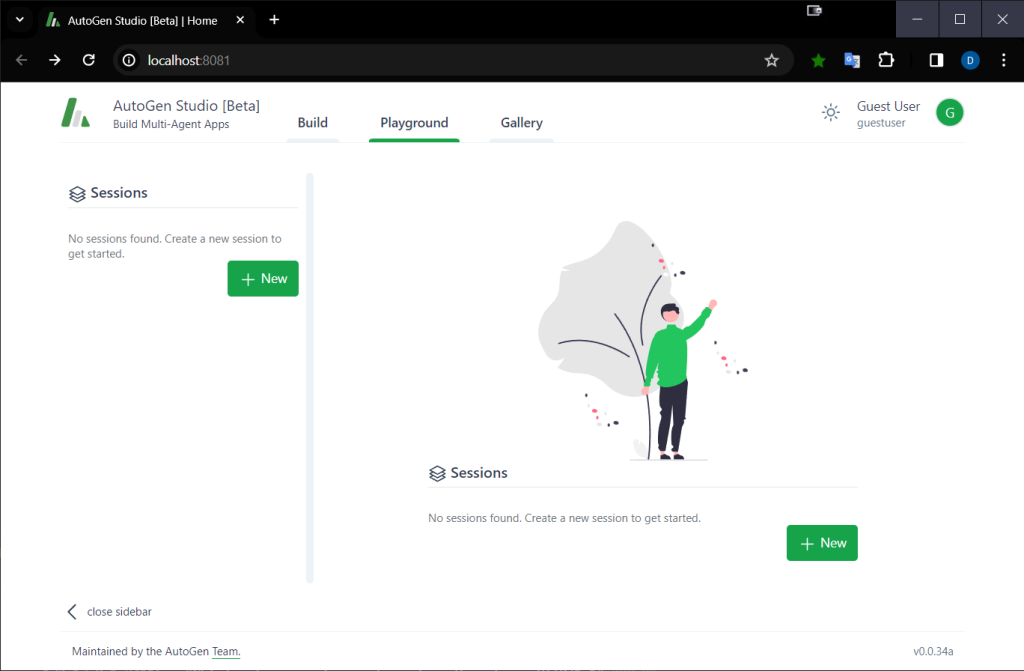

Accessing AutoGen Studio is as simple as navigating to http://localhost:8081 in your preferred web browser. The request goes to port 8081 on your local machine, and Docker forwards it to the same port inside the container.
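The port option takes the form host:container, so the two numbers do not have to match. The sketch below uses a hypothetical host port of 9000 to show which side ends up in the browser URL. It only prints the resulting command, so it is safe to run without Docker installed.

```shell
# Hypothetical host port; 8081 stays the port inside the container.
HOST_PORT=9000
CONTAINER_PORT=8081

# -p takes HOST:CONTAINER, so the browser URL uses the host side.
PUBLISH="${HOST_PORT}:${CONTAINER_PORT}"
URL="http://localhost:${HOST_PORT}"

# Print the command instead of executing it.
echo "docker run -it --rm -p ${PUBLISH} --name autogenstudio autogenstudio"
echo "then open ${URL}"
```

This is handy when port 8081 is already taken on the host: only the left-hand number changes, and the application inside the container keeps listening on 8081.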

Securing Your API Key

It’s crucial to safeguard your OpenAI API key. The setup process might hardcode the key into the Dockerfile for simplicity, but a key set that way is also stored in the built image, so keep both the file and the image private, or better yet, remove the key before sharing either. Handle API keys with care to avoid unauthorized usage and unexpected costs.
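One common alternative to hardcoding is to keep the key in the host shell’s environment and hand it to the container at start-up. The sketch below assumes the application reads the key from an OPENAI_API_KEY environment variable; the function name run_studio is made up for this example.

```shell
# run_studio: start the container with the key taken from the host environment.
# (The function name is hypothetical.)
run_studio() {
    if [ -z "${OPENAI_API_KEY:-}" ]; then
        echo "OPENAI_API_KEY is not set" >&2
        return 1
    fi
    # -e NAME with no value copies NAME from the host environment into the
    # container, so the key never appears in the Dockerfile or the image.
    docker run -it --rm -p 8081:8081 -e OPENAI_API_KEY \
        --name autogenstudio autogenstudio
}
```

After exporting the key in the current shell session, calling `run_studio` starts the container with the key available inside it. Docker’s `--env-file` option is another way to do this when several variables are involved.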

Congratulations! With the studio up and running within a Docker container, you’ve laid the groundwork for exploring the vast functionalities that AutoGen Studio offers. Ready to explore further? Stay tuned for the next steps in utilizing AutoGen Studio to its fullest potential.

Frequently Asked Questions (FAQs)

| Question | Answer |
|---|---|
| What is AutoGen Studio? | AutoGen Studio is a web interface for building and testing multi-agent AI workflows with the AutoGen framework, typically using OpenAI’s API for the underlying models. |
| Do I need Docker to run AutoGen Studio? | While not strictly necessary, using Docker simplifies the setup and management of AutoGen Studio environments. |
| Can I access AutoGen Studio from my browser? | Yes, once the Docker container is running, you can access AutoGen Studio via http://localhost:8081. |
| Is it safe to hardcode my OpenAI API key in the Dockerfile? | For security reasons, it is not recommended. Always remove the API key from the Dockerfile before sharing, and keep it secure. |
| How do I build the Docker image? | Use the command docker build -t autogenstudio -f Dockerfile.base . in the terminal. |
| How can I ensure my Docker container is removed after stopping it? | The --rm flag in the run command ensures the container is automatically removed. |
